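Python is without doubt one of the hottest languages around right now. But if we don't use it at work, what can we use it for? Here's what I do with it.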

1. If you don't need it for day-to-day development, use it as a calculator!

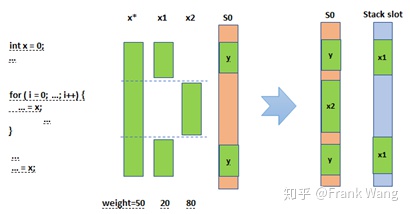

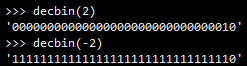

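```python
# Arithmetic
>>> (3 + 2) - 5 * 1
0
# Bit shift
>>> 3 << 2
12
# x ** y: exponentiation (note: ^ is bitwise XOR in Python); a fractional exponent gives roots
>>> 3 ** 2
9
# pow() is another way to raise to a power; pow(9, 0.5) is the square root of 9
>>> pow(9, 0.5)
3.0
# Base conversion: decimal to binary / hexadecimal / octal, and from base n back to decimal
>>> bin(2)
'0b10'
>>> hex(25)
'0x19'
>>> oct(10)          # Python 2 prints '012'
'0o12'
>>> int('e0', 16)
224
# Decimal to binary, zero-padded to a fixed width (32 bits by default) so it is easier to read
>>> def decbin(i, bit=32):
...     return bin(((1 << bit) - 1) & i)[2:].zfill(bit)
...
>>> decbin(5, 8)
'00000101'
>>> decbin(-1, 8)    # negative numbers show their two's-complement bits
'11111111'
```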
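The base-conversion comment mentions decimal to base n, but `bin()`, `oct()` and `hex()` only cover bases 2, 8 and 16. `int(s, base)` parses any base from 2 to 36, yet there is no built-in that formats an integer in an arbitrary base. A minimal sketch of such a helper using repeated `divmod` (the name `to_base` is hypothetical, not a standard function):

```python
DIGITS = '0123456789abcdefghijklmnopqrstuvwxyz'

def to_base(n, base):
    """Format a non-negative integer n as a string in the given base (2..36)."""
    if not 2 <= base <= 36:
        raise ValueError("base must be between 2 and 36")
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return '0'
    out = []
    while n > 0:
        n, r = divmod(n, base)   # peel off the lowest digit
        out.append(DIGITS[r])
    return ''.join(reversed(out))  # digits were collected lowest-first

print(to_base(25, 16))   # 19
print(to_base(10, 8))    # 12
print(to_base(255, 2))   # 11111111
```

Since `int()` is the inverse, `int(to_base(n, b), b) == n` makes a handy self-check.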

2. Write a simple web crawler

```python
#!/usr/bin/python
# -*- coding: UTF-8 -*-
# Python 2: mirror a directory of the OpenJDK hg web viewer into /tmp
import urllib, urllib2
import re
import os
import HTMLParser

dirbase = '/tmp'
urlbase = 'http://hg.openjdk.java.net'
url = urlbase + '/jdk8u/jdk8u/jdk/file/dddb1b026323/src'  # or /jdk, /hotspot
skip_to_p = ''      # if set, skip every entry until this path is reached (resume point)
skip_find = False
textmod = urllib.urlencode({'user': 'admin', 'password': 'admin'})
print(url)
res = urllib2.urlopen(urllib2.Request(url='%s?%s' % (url, textmod))).read()
allflist = []       # paths already visited
# The listing table of the hg file browser; skip the "[up]" parent link
table = re.findall(r'<tbody class="stripes2">(.+)<\/tbody>', res, re.S)
harr = re.findall(r'href="(/jdk8u[\w\/\._]+)">(?!\[up\])', table[0])

def down_src_recursion(harr):
    global allflist, skip_find
    if not harr:
        return False
    i = 0
    arrlen = len(harr)
    lock_conflict_jump_max = 2  # when a lock file is found, skip this many files
    lock_conflict_jumping = 0   # number of files still to be skipped
    print("in new dir cur...")
    if len(allflist) > 1500:
        print('over 1500, cut to the last 800...')
        allflist = allflist[-800:]
    for alink in harr:
        i += 1
        alink = alink.rstrip('/')
        if skip_to_p and not skip_find:
            if alink != skip_to_p:
                print('skip file, not reached yet..., skip=%s, now=%s' % (skip_to_p, alink))
                continue
            skip_find = True
        if alink in allflist:
            print('already visited: ' + alink)
            continue
        pa = dirbase + alink
        if os.path.isfile(pa):
            print('file already exists, no need to download: ' + pa)
            continue
        parent = os.path.dirname(pa)
        if not os.path.isdir(parent):  # top-level entries may not have a local dir yet
            os.makedirs(parent)
        lockfile = pa + '.tmp'
        if os.path.isfile(lockfile):
            lock_conflict_jumping = lock_conflict_jump_max
            print('file is being downloaded, skip +%s...: %s' % (lock_conflict_jumping, lockfile))
            continue
        elif lock_conflict_jumping > 0:
            lock_conflict_jumping -= 1
            print('file is being downloaded, skip +%s...: %s' % (lock_conflict_jumping, lockfile))
            continue
        # Mark the file as "downloading" by suffix first: the download is slow,
        # so creating the marker only after it finishes would be too late
        if pa.endswith(('.gif', '.jpg', '.png', '.xml', '.cfg', '.properties', '.make',
                        '.sh', '.bat', '.html', '.c', '.cpp', '.h', '.hpp', '.java', '.1')):
            os.mknod(lockfile)
        rest = urllib2.urlopen(urllib2.Request(urlbase + alink)).read()
        allflist.append(alink)
        if rest.find('class="sourcefirst"') > 0:
            print('this is a source file: %s %d/%d' % (alink, i, arrlen))
            if not os.path.isfile(lockfile):
                os.mknod(lockfile)
            linearr = re.findall(r'<span id=".+">(.+)</span>', rest)
            fileObject = open(pa, 'w')
            for line in linearr:
                try:
                    line = HTMLParser.HTMLParser().unescape(line)
                except UnicodeDecodeError as e:
                    print('oops, ascii decode error occurred: %s' % e)
                fileObject.write(line + '\r\n')
            fileObject.close()
            os.remove(lockfile)
        else:
            print('this is a directory: %s %d/%d' % (alink, i, arrlen))
            if not os.path.exists(pa):
                print('create directory: %s' % alink)
                os.makedirs(pa, mode=0777)
            ta = re.findall(r'<tbody class="stripes2">(.+)<\/tbody>', rest, re.S)
            ha = re.findall(r'href="(/jdk8u[\w\/\._]+)">(?!\[up\])', ta[0])
            down_src_recursion(ha)

# go...
down_src_recursion(harr)
```
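The lock-file handling in this crawler checks `os.path.isfile(lockfile)` and then calls `os.mknod(lockfile)` as two separate steps, so two crawler processes running at the same time can both see "no lock" and both start the same download. A sketch of an alternative, assuming a local filesystem: `os.open()` with `O_CREAT | O_EXCL` creates the file only if it does not already exist, so check-and-create becomes one atomic step. The helper names `try_acquire_lock` and `release_lock` are illustrative.

```python
import errno
import os
import tempfile

def try_acquire_lock(lockfile):
    """Atomically create lockfile. Return True if we created it,
    False if it already existed (someone else is downloading)."""
    try:
        # O_EXCL makes creation fail if the file already exists
        fd = os.open(lockfile, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    except OSError as e:
        if e.errno == errno.EEXIST:
            return False
        raise  # other errors (missing directory, permissions) are real problems
    os.close(fd)
    return True

def release_lock(lockfile):
    """Remove the lock once the download has finished."""
    os.remove(lockfile)

# Demo in a throwaway directory
lock = os.path.join(tempfile.mkdtemp(), 'Unsafe.java.tmp')
print(try_acquire_lock(lock))  # True: we created the lock
print(try_acquire_lock(lock))  # False: the lock is already held
release_lock(lock)
```

In the crawler, `if not try_acquire_lock(lockfile): continue` would replace the separate `isfile` check and `mknod` call.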

The crawler above is written for Python 2; on Python 3 it needs some adapting:

```python
#!/usr/bin/python3
# -*- coding: UTF-8 -*-
# for python3
import urllib.parse
import urllib.request
import re
import os
import html

dirbase = '/tmp'
urlbase = 'http://hg.openjdk.java.net'
url = urlbase + '/jdk8u/jdk8u/jdk/file/478a4add975b/src/share/classes/sun/misc'
# skip_to_p = '/jdk8u/jdk8u/jdk/file/478a4add975b/src/share/classes/sun/misc'
skip_to_p = ''      # if set, skip every entry until this path is reached (resume point)
skip_find = False
textmod = urllib.parse.urlencode({'user': 'admin', 'password': 'admin'})
print(url)
res = urllib.request.urlopen('%s?%s' % (url, textmod)).read().decode('utf-8')
allflist = []       # paths already visited
table = re.findall(r'<tbody class="stripes2">(.+)<\/tbody>', res, re.S)
harr = re.findall(r'href="(/jdk8u[\w\/\._]+)">(?!\[up\])', table[0])

def down_src_cur(harr):
    global allflist, skip_find
    if not harr:
        return False
    i = 0
    arrlen = len(harr)
    print("- In new dir cur...")
    if len(allflist) > 1500:
        print('- Over 1500, cut to the last 800...')
        allflist = allflist[-800:]
    for alink in harr:
        i += 1
        alink = alink.rstrip('/')
        if skip_to_p and not skip_find:
            if alink != skip_to_p:
                print('- Skip file, not reached yet..., skip=%s, now=%s' % (skip_to_p, alink))
                continue
            skip_find = True
        if alink in allflist:
            print('- Searched before: ' + alink)
            continue
        try:
            rest = urllib.request.urlopen(urlbase + alink).read().decode('utf-8')
        except Exception as e:
            print(e)
            print(" ERROR occurred, continue;")
            continue
        allflist.append(alink)
        pa = dirbase + alink
        if rest.find('class="sourcefirst"') > 0:
            print('- Code resource: %s %d/%d' % (alink, i, arrlen))
            linearr = re.findall(r'<span id=".+">(.+)</span>', rest)
            os.makedirs(os.path.dirname(pa), exist_ok=True)  # parent dir may not exist yet
            with open(pa, 'w', encoding='utf-8') as fileObject:
                for line in linearr:
                    fileObject.write(html.unescape(line) + '\r\n')
        else:
            print('- Directory: %s %d/%d' % (alink, i, arrlen))
            if not os.path.exists(pa):
                print('makedirs: %s' % alink)
                os.makedirs(pa, mode=0o777)
            ta = re.findall(r'<tbody class="stripes2">(.+)<\/tbody>', rest, re.S)
            ha = re.findall(r'href="(/jdk8u[\w\/\._]+)">(?!\[up\])', ta[0])
            # crawl and parse recursively
            down_src_cur(ha)

down_src_cur(harr)
```
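Both crawler versions rely on the same three regular expressions. The sketch below runs them offline against small hand-written HTML fragments; the fragments are assumptions shaped to fit those patterns, not verbatim copies of the hg.openjdk.java.net markup, which may differ.

```python
import html
import re

# Hypothetical directory-listing fragment, shaped to fit the crawler's regexes
listing = '''<table>
<tbody class="stripes2">
<tr><td><a href="/jdk8u/jdk8u/jdk/file/478a4add975b/src/share/classes/sun/">[up]</a></td></tr>
<tr><td><a href="/jdk8u/jdk8u/jdk/file/478a4add975b/src/share/classes/sun/misc/Unsafe.java">Unsafe.java</a></td></tr>
<tr><td><a href="/jdk8u/jdk8u/jdk/file/478a4add975b/src/share/classes/sun/misc/resources/">resources</a></td></tr>
</tbody>
</table>'''

# Hypothetical source-file page: one <span> per line of code, HTML-escaped
source = '''<div class="sourcefirst">
<span id="l1">package sun.misc;</span>
<span id="l2">if (a &lt; b) {}</span>
</div>'''

# 1. Cut out the listing table; 2. collect child links, skipping the "[up]" link
table = re.findall(r'<tbody class="stripes2">(.+)<\/tbody>', listing, re.S)
links = re.findall(r'href="(/jdk8u[\w\/\._]+)">(?!\[up\])', table[0])
for link in links:
    print(link.rstrip('/'))

# 3. A page containing class="sourcefirst" is treated as a file: extract and unescape its lines
lines = []
if source.find('class="sourcefirst"') > 0:
    lines = [html.unescape(s) for s in re.findall(r'<span id=".+">(.+)</span>', source)]
print(lines)  # ['package sun.misc;', 'if (a < b) {}']
```

The negative lookahead `(?!\[up\])` is what drops the parent-directory link, and without `re.S` the `<span>` pattern matches one line at a time.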

3. File search and replace:
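No code is given for this item. As one possible approach, here is a minimal sketch assuming UTF-8 text files: walk a directory tree with `os.walk`, apply a regex with `re.subn`, and only write files back when `dry_run` is off. The function `search_replace`, its parameters and the default suffix list are illustrative choices, not the author's script.

```python
import os
import re
import tempfile

def search_replace(root, pattern, replacement, exts=('.java', '.txt', '.xml'), dry_run=True):
    """Replace regex `pattern` with `replacement` in every file under `root` whose
    name ends with one of `exts`. Returns a list of (path, number_of_replacements).
    With dry_run=True nothing is written; matches are only reported."""
    regex = re.compile(pattern)
    changed = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if not name.endswith(exts):
                continue
            path = os.path.join(dirpath, name)
            try:
                # newline='' keeps the file's original line endings untouched
                with open(path, encoding='utf-8', newline='') as f:
                    text = f.read()
            except UnicodeDecodeError:
                continue  # not UTF-8 text, leave it alone
            new_text, count = regex.subn(replacement, text)
            if count:
                changed.append((path, count))
                if not dry_run:
                    with open(path, 'w', encoding='utf-8', newline='') as f:
                        f.write(new_text)
    return changed

# Demo on a throwaway directory
demo_dir = tempfile.mkdtemp()
with open(os.path.join(demo_dir, 'a.txt'), 'w', encoding='utf-8') as f:
    f.write('hello foo, foo!\n')
print(search_replace(demo_dir, r'foo', 'bar'))                 # dry run: only reports matches
print(search_replace(demo_dir, r'foo', 'bar', dry_run=False))  # rewrites a.txt
```

Running the dry run first and checking the reported counts is a cheap safeguard before letting it rewrite a whole source tree.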

4. Quick code checks

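```python
# Simple string lookups
>>> '234234fdgdfs'.find('f')     # index of the first 'f'; returns -1 if not found
6
>>> '234234fdgdfs'.index('f')    # same, but raises ValueError if not found
6
>>> '234234fdgdfs'[2:5]          # slice: characters at indexes 2, 3, 4
'423'
# Regex matching: dot-separated labels, e.g. a hostname
>>> import re
>>> re.findall(r'[a-zA-Z0-9]+(?:\.[a-zA-Z0-9]+)+', 'www.baidu.com')
['www.baidu.com']
```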
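A common regex pitfall is putting alternatives inside square brackets, e.g. `[\.|com]`, expecting it to mean the text `.com`. Square brackets define a character class that matches a single character from the set, so the sketch below compares it with a non-capturing group `(?:\.com)`. It also shows how `find()` and `index()` differ when the substring is missing.

```python
import re

# [\.|com] is a character class: it matches ONE character out of '.', '|', 'c', 'o', 'm'
char_class = re.compile(r'[\.|com]*')
# (?:\.com) is a non-capturing group: it matches the literal text '.com'
group = re.compile(r'(?:\.com)*')

print(bool(char_class.fullmatch('.moc|')))  # True: any mix of those characters passes
print(bool(group.fullmatch('.moc|')))       # False
print(bool(group.fullmatch('.com.com')))    # True

# find() returns -1 when the substring is missing; index() raises ValueError instead
print('234234fdgdfs'.find('z'))  # -1
try:
    '234234fdgdfs'.index('z')
except ValueError as e:
    print('index() raised ValueError:', e)
```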

5. Write an ops script that monitors port 8080 on this machine; if the service has gone down, it emails the owner and restarts the Tomcat server.

```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-
# Watch port 8080; if nothing is listening, restart Tomcat and mail a warning
import os
import sys
import time
import smtplib
from email.mime.text import MIMEText

def send_email(warning):
    to_mail = 'xx@163.com'
    from_mail = 'xx@163.com'
    msg = MIMEText(warning, 'plain', 'utf-8')
    msg['Subject'] = 'python send warning mail'
    msg['From'] = from_mail
    msg['To'] = to_mail
    try:
        smtp = smtplib.SMTP()
        smtp.connect('smtp.qiye.163.com')
        smtp.login('xx@163.com', 'xxx123')
        smtp.sendmail(from_mail, to_mail, msg.as_string())
        smtp.quit()
        print('send mail to %s, content is: %s' % (to_mail, warning))
    except Exception as e:
        print("Send mail Error: %s" % e)

def port_8080_lines():
    # netstat lines mentioning :8080; an empty list means nothing is listening
    return os.popen('netstat -tulnp | grep ":8080"', 'r').readlines()

# Monitoring loop...
try:
    while True:
        if not port_8080_lines():
            os.system('service tomcat7 start')
            time.sleep(3)  # give Tomcat time to start
            str1 = ''.join(port_8080_lines())
            send_email(warning="8080 port shutdown, This is a warning!!!")  # notify the owner
            is_port = -1
            try:
                # 4th column is the local address, e.g. 0.0.0.0:8080 or :::8080
                is_port = str1.split()[3].split(':')[-1]
            except IndexError as e:
                print("out of range:", e)
            if is_port != '8080':
                print('tomcat failed to start')
            else:
                print('tomcat started successfully')
        else:
            print('port 8080 is OK')
        time.sleep(5)
except KeyboardInterrupt:
    sys.exit('monitor stopped\n')
```
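The script detects a dead Tomcat by parsing `netstat` output, which depends on `netstat` being installed and on its column layout. A sketch of an alternative check, assuming the service accepts TCP connections on the loopback address 127.0.0.1: simply try to connect with `socket.create_connection()`. The helper `port_is_open` is hypothetical; `if not port_is_open('127.0.0.1', 8080):` would take the place of the `netstat`-based emptiness check in the loop.

```python
import socket

def port_is_open(host, port, timeout=1.0):
    """Return True if a TCP connection to (host, port) succeeds within timeout."""
    try:
        # create_connection raises OSError (refused, timed out, unreachable) on failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Unlike the `netstat` approach, this needs no root privileges and no external command, but it only tells you that something accepts connections on that port, not which program it is.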

6. Scientific computing, big data, image recognition...

It all depends on what your work needs!

The following command works backwards from a port to the process listening on it and kills that process:

```shell
# run as root so that netstat can show the PID/Program name column
netstat -tunlp | grep ':8080' | awk '{split($7, arr, "/"); print arr[1]}' | xargs kill -9
```
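The `awk` step takes field 7, which is the `PID/Program name` column on TCP lines. UDP lines usually have no `State` column, so there the PID sits in field 6, and without root privileges the column shows only `-`. A Python sketch of the same extraction that accounts for both cases (the function name `pid_from_netstat_line` is hypothetical):

```python
def pid_from_netstat_line(line):
    """Return the PID from one line of `netstat -tunlp` output, or None
    if the line is empty/short or the PID column shows '-'."""
    fields = line.split()
    if not fields:
        return None
    # TCP lines carry a State column (e.g. LISTEN); UDP lines usually do not,
    # so the PID/Program column is field 7 for TCP and field 6 for UDP
    idx = 6 if fields[0].startswith('tcp') else 5
    if len(fields) <= idx:
        return None
    pid = fields[idx].split('/')[0]
    return int(pid) if pid.isdigit() else None
```

The returned PID could then be passed to `os.kill(pid, signal.SIGKILL)`, the Python counterpart of `kill -9`.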